[Happy Open Source] Paddle Tensor Standardization, Phase 2: 0-size tensor support for broadcast_arrays

This article was last updated on 2024-12-11; its content may be out of date.

broadcast_arrays

Reproducing the problem

ARRAY_API_TESTS_MODULE=array_api_compat.paddle ARRAY_API_TESTS_SKIP_DTYPES=int8,int16,uint16,uint8,uint32,uint64 ARRAY_API_TESTS_VERSION="2023.12" python -m pytest -s -vvv array_api_tests/test_data_type_functions.py::test_broadcast_arrays

Result:

=================================== FAILURES ===================================

____________________________ test_broadcast_arrays _____________________________

+ Exception Group Traceback (most recent call last):

| File "/root/anaconda3/envs/paddle/lib/python3.10/site-packages/_pytest/runner.py", line 341, in from_call

| result: TResult | None = func()

| File "/root/anaconda3/envs/paddle/lib/python3.10/site-packages/_pytest/runner.py", line 242, in <lambda>

| lambda: runtest_hook(item=item, **kwds), when=when, reraise=reraise

| File "/root/anaconda3/envs/paddle/lib/python3.10/site-packages/pluggy/_hooks.py", line 513, in __call__

| return self._hookexec(self.name, self._hookimpls.copy(), kwargs, firstresult)

| File "/root/anaconda3/envs/paddle/lib/python3.10/site-packages/pluggy/_manager.py", line 120, in _hookexec

| return self._inner_hookexec(hook_name, methods, kwargs, firstresult)

| File "/root/anaconda3/envs/paddle/lib/python3.10/site-packages/pluggy/_callers.py", line 182, in _multicall

| return outcome.get_result()

| File "/root/anaconda3/envs/paddle/lib/python3.10/site-packages/pluggy/_result.py", line 100, in get_result

| raise exc.with_traceback(exc.__traceback__)

| File "/root/anaconda3/envs/paddle/lib/python3.10/site-packages/pluggy/_callers.py", line 167, in _multicall

| teardown.throw(outcome._exception)

| File "/root/anaconda3/envs/paddle/lib/python3.10/site-packages/_pytest/threadexception.py", line 92, in pytest_runtest_call

| yield from thread_exception_runtest_hook()

| File "/root/anaconda3/envs/paddle/lib/python3.10/site-packages/_pytest/threadexception.py", line 68, in thread_exception_runtest_hook

| yield

| File "/root/anaconda3/envs/paddle/lib/python3.10/site-packages/pluggy/_callers.py", line 167, in _multicall

| teardown.throw(outcome._exception)

| File "/root/anaconda3/envs/paddle/lib/python3.10/site-packages/_pytest/unraisableexception.py", line 95, in pytest_runtest_call

| yield from unraisable_exception_runtest_hook()

| File "/root/anaconda3/envs/paddle/lib/python3.10/site-packages/_pytest/unraisableexception.py", line 70, in unraisable_exception_runtest_hook

| yield

| File "/root/anaconda3/envs/paddle/lib/python3.10/site-packages/pluggy/_callers.py", line 167, in _multicall

| teardown.throw(outcome._exception)

| File "/root/anaconda3/envs/paddle/lib/python3.10/site-packages/_pytest/logging.py", line 846, in pytest_runtest_call

| yield from self._runtest_for(item, "call")

| File "/root/anaconda3/envs/paddle/lib/python3.10/site-packages/_pytest/logging.py", line 829, in _runtest_for

| yield

| File "/root/anaconda3/envs/paddle/lib/python3.10/site-packages/pluggy/_callers.py", line 167, in _multicall

| teardown.throw(outcome._exception)

| File "/root/anaconda3/envs/paddle/lib/python3.10/site-packages/_pytest/capture.py", line 880, in pytest_runtest_call

| return (yield)

| File "/root/anaconda3/envs/paddle/lib/python3.10/site-packages/pluggy/_callers.py", line 167, in _multicall

| teardown.throw(outcome._exception)

| File "/root/anaconda3/envs/paddle/lib/python3.10/site-packages/_pytest/skipping.py", line 257, in pytest_runtest_call

| return (yield)

| File "/root/anaconda3/envs/paddle/lib/python3.10/site-packages/pluggy/_callers.py", line 103, in _multicall

| res = hook_impl.function(*args)

| File "/root/anaconda3/envs/paddle/lib/python3.10/site-packages/_pytest/runner.py", line 174, in pytest_runtest_call

| item.runtest()

| File "/root/anaconda3/envs/paddle/lib/python3.10/site-packages/_pytest/python.py", line 1627, in runtest

| self.ihook.pytest_pyfunc_call(pyfuncitem=self)

| File "/root/anaconda3/envs/paddle/lib/python3.10/site-packages/pluggy/_hooks.py", line 513, in __call__

| return self._hookexec(self.name, self._hookimpls.copy(), kwargs, firstresult)

| File "/root/anaconda3/envs/paddle/lib/python3.10/site-packages/pluggy/_manager.py", line 120, in _hookexec

| return self._inner_hookexec(hook_name, methods, kwargs, firstresult)

| File "/root/anaconda3/envs/paddle/lib/python3.10/site-packages/pluggy/_callers.py", line 139, in _multicall

| raise exception.with_traceback(exception.__traceback__)

| File "/root/anaconda3/envs/paddle/lib/python3.10/site-packages/pluggy/_callers.py", line 103, in _multicall

| res = hook_impl.function(*args)

| File "/root/anaconda3/envs/paddle/lib/python3.10/site-packages/_pytest/python.py", line 159, in pytest_pyfunc_call

| result = testfunction(**testargs)

| File "/home/array-api-tests/array_api_tests/test_data_type_functions.py", line 66, in test_broadcast_arrays

| shapes=st.integers(1, 5).flatmap(hh.mutually_broadcastable_shapes), data=st.data()

| File "/root/anaconda3/envs/paddle/lib/python3.10/site-packages/hypothesis/core.py", line 1722, in wrapped_test

| raise the_error_hypothesis_found

| exceptiongroup.ExceptionGroup: Hypothesis found 2 distinct failures. (2 sub-exceptions)

+-+---------------- 1 ----------------

| Traceback (most recent call last):

| File "/home/array-api-tests/array_api_tests/test_data_type_functions.py", line 74, in test_broadcast_arrays

| out = xp.broadcast_arrays(*arrays)

| File "/home/array-api-compat/array_api_compat/paddle/_aliases.py", line 904, in broadcast_arrays

| return [paddle.broadcast_to(a, shape) for a in arrays]

| File "/home/array-api-compat/array_api_compat/paddle/_aliases.py", line 904, in <listcomp>

| return [paddle.broadcast_to(a, shape) for a in arrays]

| File "/root/anaconda3/envs/paddle/lib/python3.10/site-packages/paddle/tensor/manipulation.py", line 4714, in broadcast_to

| return expand(x, shape, name)

| File "/root/anaconda3/envs/paddle/lib/python3.10/site-packages/paddle/tensor/manipulation.py", line 4747, in expand

| return _C_ops.expand(x, shape)

| ValueError: (InvalidArgument) The expanded size cannot be zero.

| [Hint: Expected expand_shape[i] != 0, but received expand_shape[i]:0 == 0:0.] (at /paddle/paddle/phi/kernels/impl/expand_kernel_impl.h:43)

|

| Falsifying example: test_broadcast_arrays(

| shapes=((0,),),

| data=data(...),

| )

| Draw 1 (x1): Tensor(shape=[0], dtype=bool, place=Place(cpu), stop_gradient=True,

| [])

+---------------- 2 ----------------

| Traceback (most recent call last):

| File "/home/array-api-tests/array_api_tests/test_data_type_functions.py", line 84, in test_broadcast_arrays

| ph.assert_result_shape(

| File "/home/array-api-tests/array_api_tests/pytest_helpers.py", line 320, in assert_result_shape

| assert out_shape == expected, msg

| AssertionError: out[0].shape=[], but should be () [broadcast_arrays( () )]

| Falsifying example: test_broadcast_arrays(

| shapes=((),),

| data=data(...),

| )

| Draw 1 (x1): Tensor(shape=[], dtype=bool, place=Place(cpu), stop_gradient=True,

| False)

+------------------------------------

=============================== warnings summary ===============================

../../root/anaconda3/envs/paddle/lib/python3.10/site-packages/setuptools/command/easy_install.py:41

/root/anaconda3/envs/paddle/lib/python3.10/site-packages/setuptools/command/easy_install.py:41: DeprecationWarning: pkg_resources is deprecated as an API. See https://setuptools.pypa.io/en/latest/pkg_resources.html

import pkg_resources

array_api_tests/__init__.py:83

/home/array-api-tests/array_api_tests/__init__.py:83: HypothesisWarning: Could not determine whether module array_api_compat.paddle is an Array API library

xps = array_api.make_strategies_namespace(xp, api_version=api_version)

-- Docs: https://docs.pytest.org/en/stable/how-to/capture-warnings.html

=========================== short test summary info ============================

FAILED array_api_tests/test_data_type_functions.py::test_broadcast_arrays - exceptiongroup.ExceptionGroup: Hypothesis found 2 distinct failures. (2 sub-exceptions)

======================== 1 failed, 2 warnings in 5.01s =========================

This operator reuses paddle.broadcast_to (see the broadcast_to API documentation on the PaddlePaddle deep learning platform site).

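Running the test shows that the failure originates in the expand function in /root/anaconda3/envs/paddle/lib/python3.10/site-packages/paddle/tensor/manipulation.py. That function calls _C_ops.expand, so we need to look at how the expand operator is implemented.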
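To make the two falsifying examples concrete, here is a minimal pure-Python sketch of NumPy-style shape broadcasting. It is not Paddle or array-api-compat code; `broadcast_shapes` is a hypothetical helper written only for illustration. It shows that shapes `(0,)` and `()` are both valid broadcast targets, so the wrapper is expected to handle them.

```python
from itertools import zip_longest


def broadcast_shapes(*shapes):
    """Return the common broadcast shape of the given shapes (NumPy-style rules)."""
    result = []
    # Walk the dimensions from the trailing axis, padding short shapes with 1.
    for dims in zip_longest(*(reversed(s) for s in shapes), fillvalue=1):
        non_one = {d for d in dims if d != 1}
        if len(non_one) > 1:
            raise ValueError(f"shapes {shapes} are not broadcastable")
        result.append(non_one.pop() if non_one else 1)
    return tuple(reversed(result))


print(broadcast_shapes((0,)))          # (0,)
print(broadcast_shapes(()))            # ()
print(broadcast_shapes((3, 1), (0,)))  # (3, 0)
```

A size-0 dimension behaves like any other non-1 size here: it broadcasts against 1 and against itself, and the result is simply an array with no elements.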

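Relevant files:

- paddle/tensor/manipulation.py: Python API of the expand operator
- paddle/phi/infermeta/unary.cc: InferMeta function implementation
- paddle/phi/ops/yaml/ops.yaml: operator yaml configuration
- paddle/phi/ops/yaml/backward.yaml: yaml configuration for the operator's backward pass
- paddle/phi/kernels: kernel implementations
  - expand_kernel.h
  - expand_kernel_impl.h: the actual implementation in this case
  - /cpu/expand_kernel.cc: CPU implementation
  - /gpu/expand_kernel.cu: GPU implementation
  - /expand_grad_kernel.h: backward implementation
  - /cpu/expand_grad_kernel.cc: CPU backward pass
  - /gpu/expand_grad_kernel.cu: GPU backward pass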

Reading the source

unary.cc

InferMeta determines the static information of the output, namely its shape and data type.

void ExpandInferMeta(const MetaTensor& x,
                     const IntArray& shape,
                     MetaTensor* out) {
  // ExpandInferMeta takes the metadata of the input tensor x, the target
  // shape as an IntArray, and a MetaTensor pointer out that receives the
  // output metadata.

  // Maximum supported rank (number of dimensions) is 8.
#define EXPAND_MAX_RANK_SUPPORTED 8
  // Get the dims of x and the target shape stored in `shape`.
  auto x_dims = x.dims();
  auto expand_shape = shape.GetData();
  // An empty target shape defaults to one -1 per input dimension;
  // -1 means "keep this dimension unchanged".
  if (expand_shape.empty()) {
    expand_shape = std::vector<int64_t>(x_dims.size(), -1);
  }
  // The target shape must have at least as many elements as the rank of x.
  PADDLE_ENFORCE_GE(
      expand_shape.size(),
      static_cast<size_t>(x_dims.size()),
      common::errors::InvalidArgument(
          "The number of elements (%d) of 'shape' for "
          "expand_v2 op must be greater than or equal to the rank "
          "(%d) of the input.",
          expand_shape.size(),
          static_cast<size_t>(x_dims.size())));
  // The target shape must not exceed the maximum supported rank.
  PADDLE_ENFORCE_LE(expand_shape.size(),
                    EXPAND_MAX_RANK_SUPPORTED,
                    common::errors::InvalidArgument(
                        "The number of elements (%d) of 'shape' for "
                        "must not be greater than %d.",
                        expand_shape.size(),
                        EXPAND_MAX_RANK_SUPPORTED));
  // The number of elements must be non-negative (the message says
  // "positive", but the check itself is >= 0).
  PADDLE_ENFORCE_GE(expand_shape.size(),
                    0,
                    common::errors::InvalidArgument(
                        "The number of elements (%d) of 'shape' for "
                        "must be a positive integer.",
                        expand_shape.size()));
  int out_rank = expand_shape.size();
  // A lambda computes the output shape, which is stored in out_shape.
  const auto& out_shape = [&]() -> std::vector<int64_t> {
    std::vector<int64_t> res = expand_shape;
    int x_rank = x_dims.size();
    // DealWithMinusOne handles -1 entries ("keep this dimension"): walking
    // from the trailing axis, each -1 is replaced by the matching dim of x.
    const auto& DealWithMinusOne = [&]() {
      for (int x_idx = x_rank - 1, out_idx = out_rank - 1; x_idx >= 0;
           x_idx--, out_idx--) {
        if (res[out_idx] == -1) {
          res[out_idx] = x_dims[x_idx];
        }
      }
    };
    // DealWithMinusTwo handles -2 entries, which mark a dimension whose
    // size is a variable; each -2 is replaced by -1.
    const auto& DealWithMinusTwo = [&]() {
      // We use -2 to represent the element in expand_shape is a var.
      for (int x_idx = x_rank - 1, out_idx = out_rank - 1; out_idx >= 0;
           x_idx--, out_idx--) {
        if (res[out_idx] == -2) {
          res[out_idx] = -1;
        }
      }
    };
    // Run both helpers to produce the final output shape.
    DealWithMinusOne();
    DealWithMinusTwo();
    return res;
  }();
  // Set the dims of the output tensor.
  out->set_dims(common::make_ddim(out_shape));
  // The output has the same data type as x.
  out->set_dtype(x.dtype());
  // If the output rank is greater than 0 and its first dimension equals the
  // first dimension of x, share the LoD (Level of Detail) information of x.
  if (out_rank > 0 && out_shape[0] == x_dims[0]) {
    out->share_lod(x);
  }
  // End of the macro's scope.
#undef EXPAND_MAX_RANK_SUPPORTED
}
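The shape logic of ExpandInferMeta can be sketched in a few lines of Python. This is an illustrative re-implementation on plain lists, not Paddle code; the function name `infer_expand_shape` is made up, and the maximum-rank check is left out for brevity.

```python
def infer_expand_shape(x_dims, expand_shape):
    """Mimic the shape logic of ExpandInferMeta on plain Python lists."""
    x_dims = list(x_dims)
    # An empty target shape means "keep every input dimension".
    res = list(expand_shape) if expand_shape else [-1] * len(x_dims)
    if len(res) < len(x_dims):
        raise ValueError("shape must have at least as many elements as the input rank")
    # Align from the trailing axis: -1 copies the matching input dimension.
    offset = len(res) - len(x_dims)
    for x_idx, dim in enumerate(x_dims):
        if res[offset + x_idx] == -1:
            res[offset + x_idx] = dim
    # -2 marks a value only known at run time; it is reported as -1 (unknown).
    return [-1 if d == -2 else d for d in res]


print(infer_expand_shape([3], [2, -1]))   # [2, 3]
print(infer_expand_shape([2, 3], []))     # [2, 3]
print(infer_expand_shape([3], [-2, 3]))   # [-1, 3]
print(infer_expand_shape([0], [0]))       # [0]
```

Note that nothing at this stage rejects a 0 in the target shape: the last call returns `[0]`, which matches the traceback, where the error is raised later in the kernel.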

expand_kernel_impl.h

This file mainly implements the expand operation on CPU.

// Copyright (c) 2022 PaddlePaddle Authors. All Rights Reserved.
//
// Licensed under the Apache License, Version 2.0 (the "License");
// you may not use this file except in compliance with the License.
// You may obtain a copy of the License at
//
// http://www.apache.org/licenses/LICENSE-2.0
//
// Unless required by applicable law or agreed to in writing, software
// distributed under the License is distributed on an "AS IS" BASIS,
// WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
// See the License for the specific language governing permissions and
// limitations under the License.

#pragma once

#include <algorithm>
#include <vector>

#include "paddle/phi/kernels/funcs/eigen/common.h"
#include "paddle/phi/kernels/funcs/eigen/eigen_function.h"

#define MAX_RANK_SUPPORTED 8

namespace phi {

using Tensor = DenseTensor;

template <typename Context, typename T, int Rank>
void Expand(const Context& ctx,
            const DenseTensor& x,
            const IntArray& shape,
            DenseTensor* out) {
  // Expand takes the context ctx, the input tensor x, the target shape and
  // the output tensor out. Its template parameters are the context type
  // Context, the data type T and the tensor rank Rank.
  auto in_dims = x.dims();
  auto expand_shape = shape.GetData();
  // Convert the dims of x to a vector, compute how many more dimensions the
  // target shape has, and left-pad the input dims with that many 1s.
  auto vec_in_dims = common::vectorize<int>(in_dims);
  auto diff = expand_shape.size() - vec_in_dims.size();
  vec_in_dims.insert(vec_in_dims.begin(), diff, 1);
  std::vector<int> repeat_times(vec_in_dims.size());
  // For rank 0, copy the input tensor x straight into out.
  if (Rank == 0) {
    phi::Copy<Context>(ctx, x, ctx.GetPlace(), false, out);
    return;
  }
  for (size_t i = 0; i < vec_in_dims.size(); ++i) {
    // Every entry of the target shape must be non-zero. This is the check
    // that raises "The expanded size cannot be zero." in the traceback.
    PADDLE_ENFORCE_NE(
        expand_shape[i],
        0,
        common::errors::InvalidArgument("The expanded size cannot be zero."));
    if (i < diff) {
      PADDLE_ENFORCE_GT(
          expand_shape[i],
          0,
          common::errors::InvalidArgument(
              "The expanded size (%d) for non-existing dimensions must be "
              "positive for expand_v2 op.",
              expand_shape[i]));
      repeat_times[i] = expand_shape[i];
    } else if (expand_shape[i] > 0) {
      if (vec_in_dims[i] != 1) {
        // An existing dimension whose size is not 1 must match the
        // corresponding value in the target shape.
        PADDLE_ENFORCE_EQ(
            vec_in_dims[i],
            expand_shape[i],
            common::errors::InvalidArgument(
                "The value (%d) of the non-singleton dimension does not match"
                " the corresponding value (%d) in shape for expand_v2 op.",
                vec_in_dims[i],
                expand_shape[i]));
        repeat_times[i] = 1;
      } else {
        repeat_times[i] = expand_shape[i];
      }
    } else {
      // Among negative values only -1 is supported; the dimension is kept,
      // so repeat_times[i] is set to 1.
      PADDLE_ENFORCE_EQ(
          expand_shape[i],
          -1,
          common::errors::InvalidArgument(
              "When the value in shape is negative for expand_v2 op, "
              "only -1 is supported, but the value received is %d.",
              expand_shape[i]));
      repeat_times[i] = 1;
    }
  }
  // bcast_dims is an Eigen::DSizes array holding the per-dimension
  // broadcast factors used below.
  Eigen::DSizes<Eigen::DenseIndex, Rank> bcast_dims;
  for (size_t i = 0; i < repeat_times.size(); ++i) {
    bcast_dims[i] = repeat_times[i];
  }

  // Compute the padded input dims and the output dims.
  DDim new_in_dims = common::make_ddim(vec_in_dims);
  DDim out_dims(new_in_dims);
  for (size_t i = 0; i < repeat_times.size(); ++i) {
    out_dims[i] *= repeat_times[i];
  }

  // Resize the output tensor and allocate its memory.
  out->Resize(out_dims);
  auto x0 = EigenTensor<T, Rank>::From(x, new_in_dims);
  ctx.template Alloc<T>(out);
  out->data<T>();

  auto y = EigenTensor<T, Rank>::From(*out, out_dims);
  auto& place = *ctx.eigen_device();
  // use 32-bit index to speed up
  // Depending on whether 32-bit indexing is possible, call the matching
  // broadcast routine to expand the tensor.
  bool use_32bit_index = y.size() < Eigen::NumTraits<int>::highest();
  if (use_32bit_index) {
    phi::funcs::EigenBroadcast<std::decay_t<decltype(place)>, T, Rank>::Eval(
        place, To32BitIndex(y), To32BitIndex(x0), bcast_dims);
  } else {
    phi::funcs::EigenBroadcast<std::decay_t<decltype(place)>, T, Rank>::Eval(
        place, y, x0, bcast_dims);
  }
}
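The validation loop in Expand is easier to follow when reduced to plain Python. The sketch below mirrors the branches of that loop on lists and returns the per-axis repeat counts. It is only an illustration: `compute_repeat_times` is a hypothetical name, and it assumes the target shape is at least as long as the input dims, which ExpandKernel checks before dispatching.

```python
def compute_repeat_times(in_dims, expand_shape):
    """Mirror the validation loop of Expand and return the per-axis repeat counts."""
    diff = len(expand_shape) - len(in_dims)
    # Left-pad the input dims with 1 so both lists have the same length.
    padded = [1] * diff + list(in_dims)
    repeat_times = []
    for i, target in enumerate(expand_shape):
        if target == 0:
            # Same condition as the check that fails in the traceback.
            raise ValueError("The expanded size cannot be zero.")
        if i < diff:
            if target <= 0:
                raise ValueError("size for a non-existing dimension must be positive")
            repeat_times.append(target)
        elif target > 0:
            if padded[i] != 1:
                if padded[i] != target:
                    raise ValueError("non-singleton dimension does not match shape")
                repeat_times.append(1)
            else:
                repeat_times.append(target)
        else:
            if target != -1:
                raise ValueError("only -1 is supported as a negative value")
            repeat_times.append(1)
    return repeat_times


print(compute_repeat_times([3], [2, 3]))      # [2, 1]
print(compute_repeat_times([1, 3], [4, -1]))  # [4, 1]
```

Calling `compute_repeat_times([0], [0])` raises `ValueError`, which is the first failure reported by Hypothesis for `shapes=((0,),)`.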

template <typename T, typename Context>
void ExpandKernel(const Context& ctx,
                  const DenseTensor& x,
                  const IntArray& shape,
                  DenseTensor* out) {
  // ExpandKernel takes the context ctx, the input tensor x, the target shape
  // and the output tensor out. It validates the ranks, then dispatches to the
  // Expand instantiation for the larger of the input rank and the number of
  // elements in shape.
  auto rank = x.dims().size();
  PADDLE_ENFORCE_GE(
      rank,
      0,
      common::errors::InvalidArgument(
          "The rank of the input 'X' for expand_v2 op must be positive, "
          "but the value received is %d.",
          rank));
  PADDLE_ENFORCE_LE(
      rank,
      MAX_RANK_SUPPORTED,
      common::errors::InvalidArgument(
          "The rank of the input 'X' for expand_v2 op must be less than "
          "or equal to %d, but the value received is %d.",
          MAX_RANK_SUPPORTED,
          rank));
  auto expand_shape = shape.GetData();
  auto shape_size = expand_shape.size();
  PADDLE_ENFORCE_GE(
      shape_size,
      rank,
      common::errors::InvalidArgument(
          "The number (%d) of elements of 'shape' for expand_v2 op must be "
          "greater than or equal to the rank (%d) of the input 'X'.",
          shape_size,
          rank));
  PADDLE_ENFORCE_LE(
      shape_size,
      MAX_RANK_SUPPORTED,
      common::errors::InvalidArgument(
          "The number (%d) of elements of 'shape' for expand_v2 op must be "
          "less than or equal to %d.",
          shape_size,
          MAX_RANK_SUPPORTED));
  rank = std::max(rank, static_cast<int>(shape_size));
  switch (rank) {
    case 0:
      Expand<Context, T, 0>(ctx, x, shape, out);
      break;
    case 1:
      Expand<Context, T, 1>(ctx, x, shape, out);
      break;
    case 2:
      Expand<Context, T, 2>(ctx, x, shape, out);
      break;
    case 3:
      Expand<Context, T, 3>(ctx, x, shape, out);
      break;
    case 4:
      Expand<Context, T, 4>(ctx, x, shape, out);
      break;
    case 5:
      Expand<Context, T, 5>(ctx, x, shape, out);
      break;
    case 6:
      Expand<Context, T, 6>(ctx, x, shape, out);
      break;
    case 7:
      Expand<Context, T, 7>(ctx, x, shape, out);
      break;
    case 8:
      Expand<Context, T, 8>(ctx, x, shape, out);
      break;
  }
}

}  // namespace phi

Approach to the fix

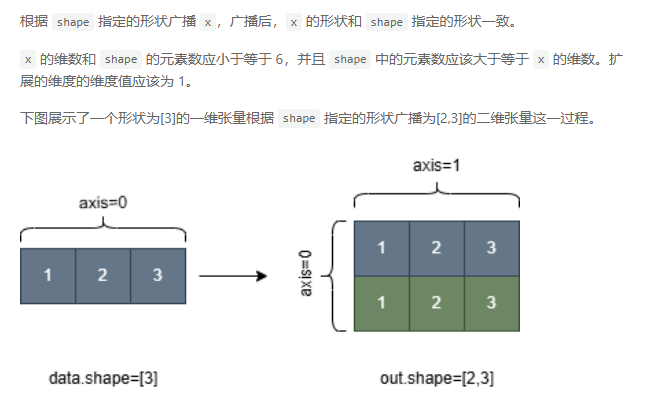

The figure shows the output for a rank-1 input tensor [1,2,3] with expand_shape=[2,3].

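Take the same rank-1 input tensor [1,2,3]. If expand_shape=[0,3], the first dimension of the result has size 0, so the result holds no elements and is in effect a 0-size tensor. In this case the kernel should therefore return a 0-size tensor directly instead of raising an error.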
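The intended behavior can be illustrated with nested Python lists. This is only a sketch of the semantics for the rank-1 example above, not the actual kernel patch; `expand_rows` is a hypothetical helper.

```python
def expand_rows(row, shape):
    """Expand a rank-1 list to a 2-D shape [n, len(row)], allowing n == 0."""
    n, width = shape
    if width != len(row):
        raise ValueError("trailing dimension must match the input length")
    if n < 0:
        raise ValueError("the leading dimension must be non-negative in this sketch")
    # n == 0 simply yields an empty list: a 0-size result of shape [0, width].
    return [list(row) for _ in range(n)]


print(expand_rows([1, 2, 3], [2, 3]))  # [[1, 2, 3], [1, 2, 3]]
print(expand_rows([1, 2, 3], [0, 3]))  # []
```

With a leading size of 0 there is nothing to copy, so an early return with an empty output of the requested shape is enough; no broadcast work is needed.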

Rebuilding and installing

cd /paddle/build

time cmake .. -DPY_VERSION=3.10 -DWITH_GPU=OFF -DWITH_TESTING=ON

time make -j$(nproc)

cd /paddle/build/python/dist

pip install -U paddlepaddle-0.0.0-cp310-cp310-linux_x86_64.whl --force-reinstall -i https://mirrors.tuna.tsinghua.edu.cn/pypi/web/simple

Problems

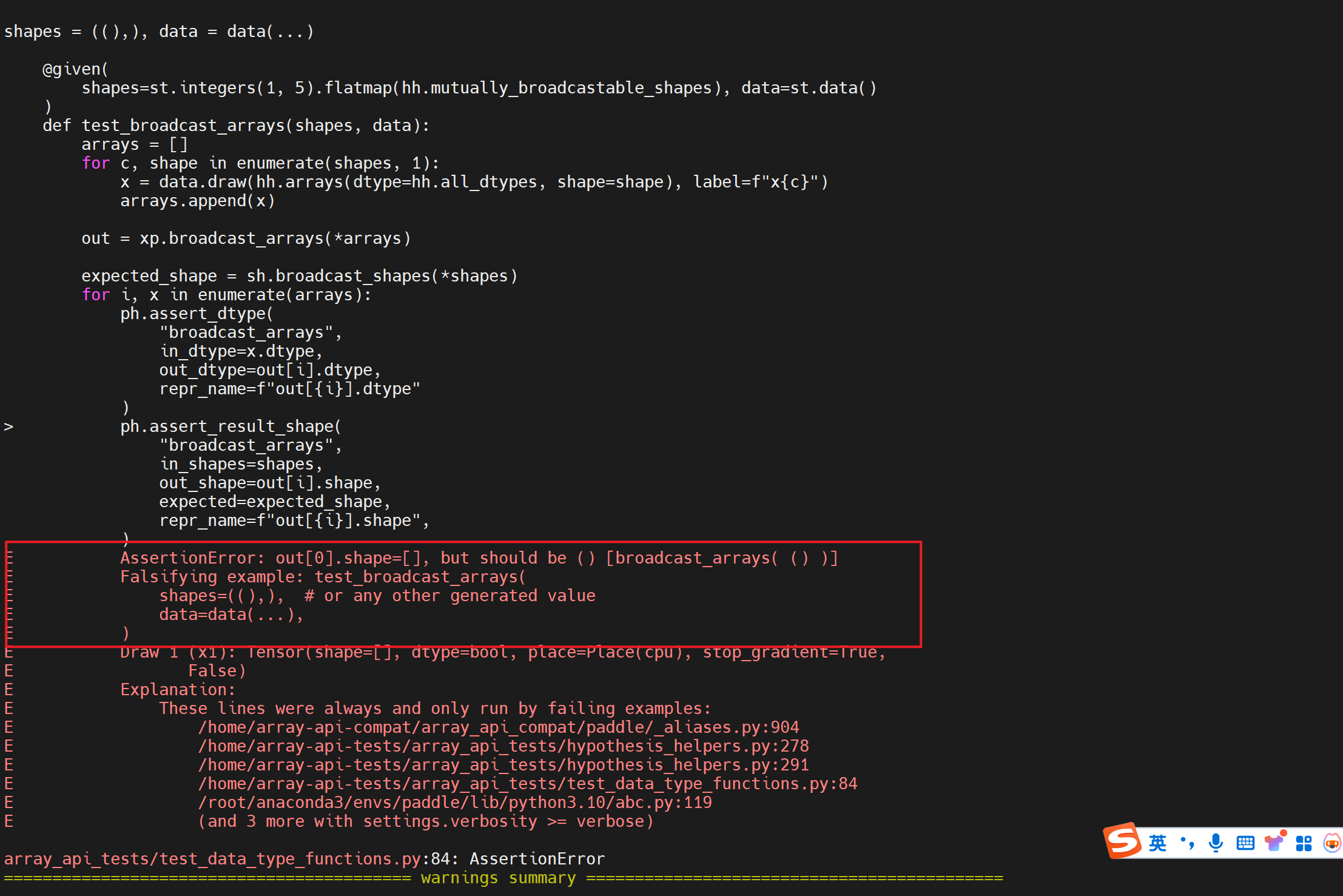

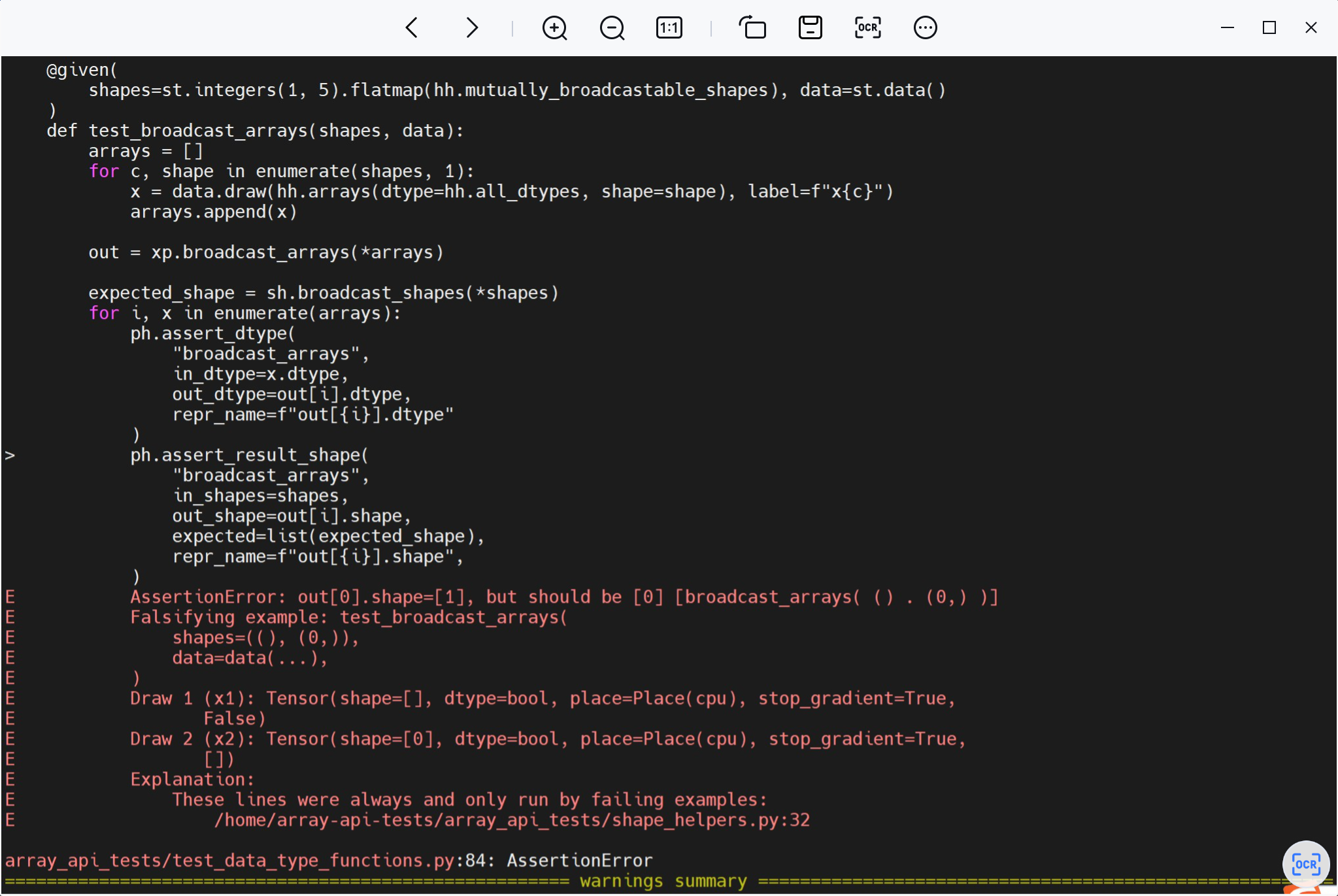

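Type conversion in the api-test source

Because the api-test suite is written around NumPy conventions, the test code at this point needs to be modified.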
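The second failure in the traceback reads `out[0].shape=[], but should be ()`: the reported shape is a list while the expected shape is a tuple. The exact change made to the test suite is only visible in the screenshots, so the snippet below is just a sketch of one way to compare shapes by value; `shapes_match` is a hypothetical helper, not part of array-api-tests.

```python
def shapes_match(out_shape, expected):
    """Compare shapes by value, ignoring whether they are lists or tuples."""
    return tuple(out_shape) == tuple(expected)


print([] == ())                  # False: a list never equals a tuple
print(shapes_match([], ()))      # True
print(shapes_match([0], (0,)))   # True
```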

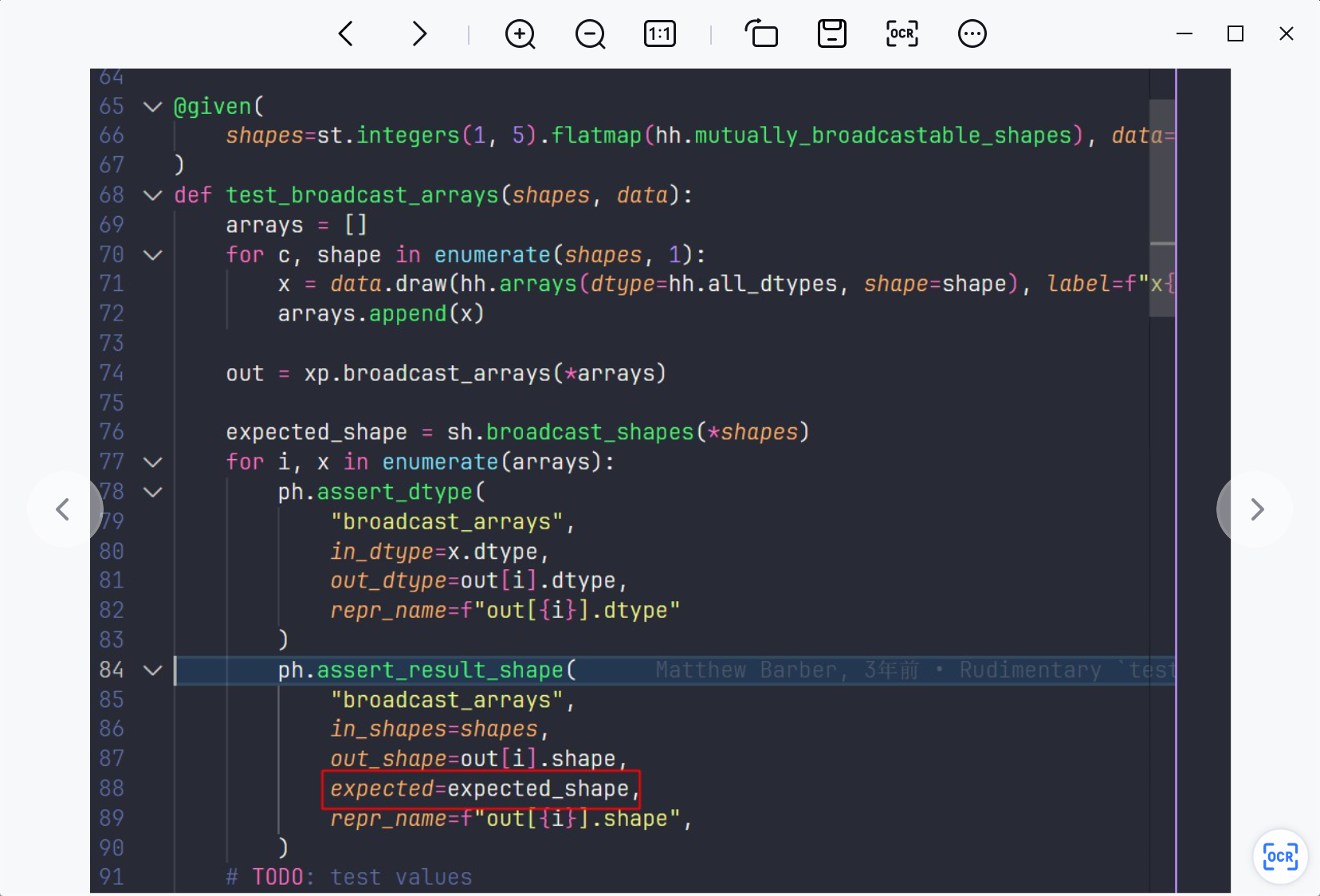

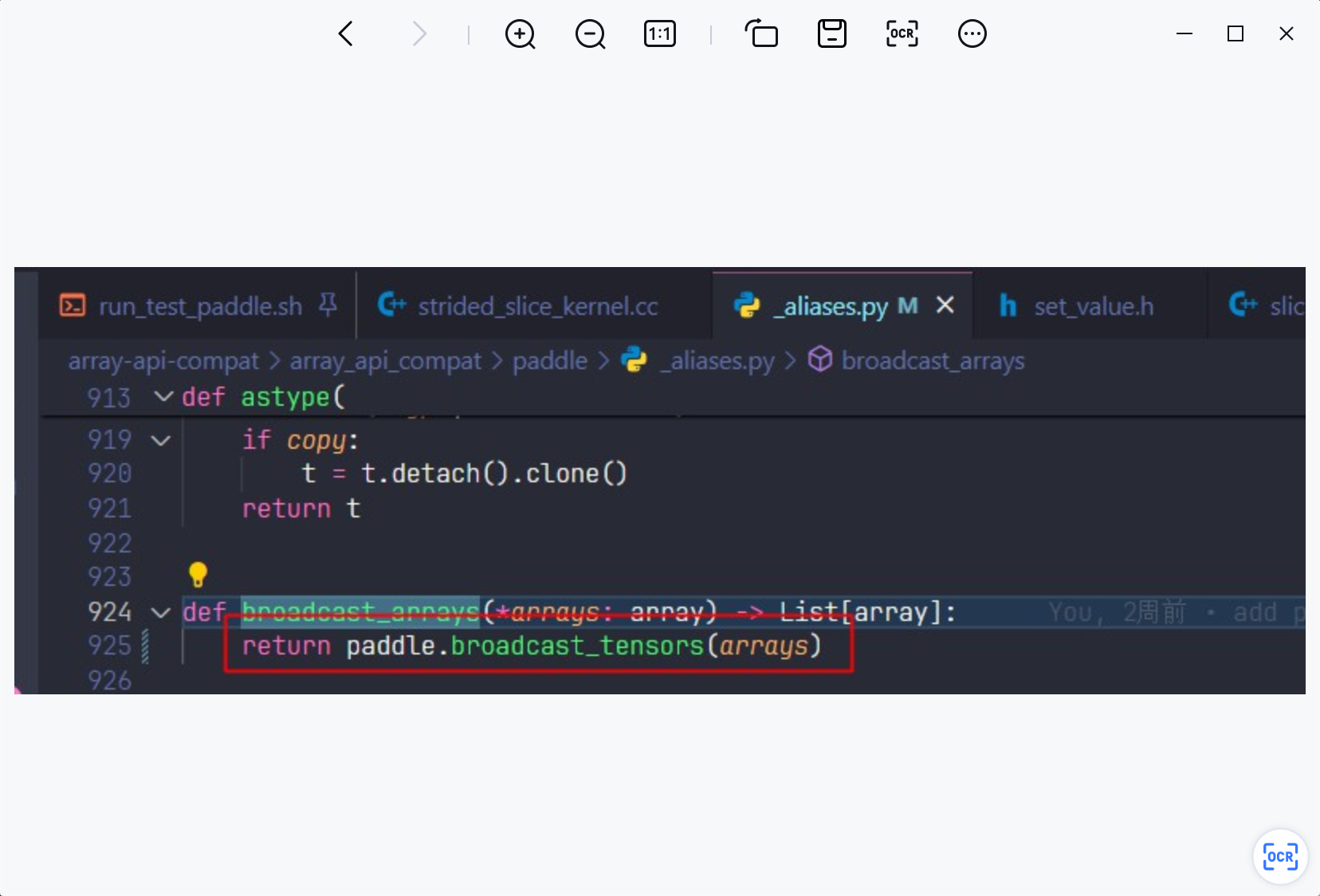

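broadcast_arrays output shape mismatch

At this point, with expand modified, broadcast_to (another API that calls expand) already passes. The mentor's answer was to simply switch to a different API for the test?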

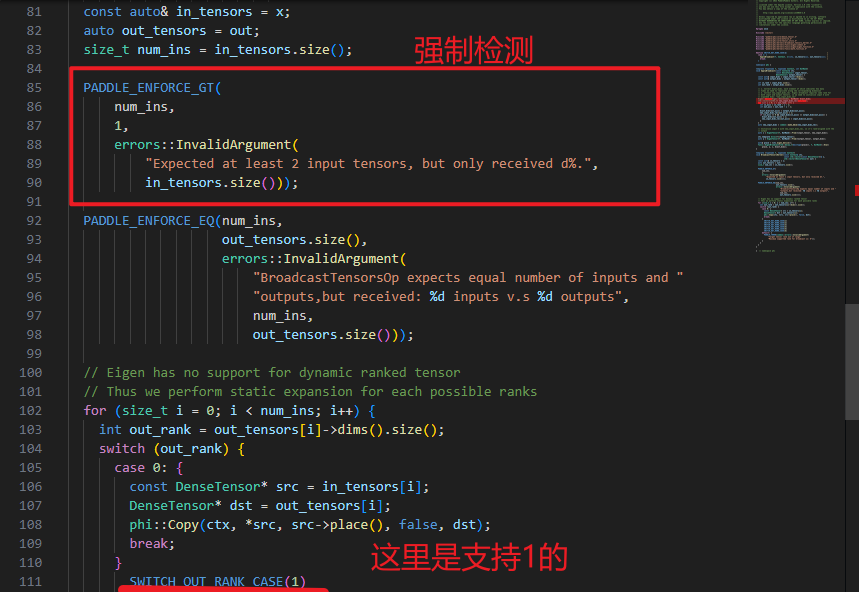

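Forced input check in broadcast_tensor for a single input

I forgot to take a screenshot of this one. NumPy does support a single input, and so does Paddle, but the previous author added an enforcement macro that rejects it, which leads to an input error.

Changing the corresponding check to GE (greater than or equal) is enough.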
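The difference between a strict and a non-strict bound can be sketched as follows. The bound of 1 and both helper names are assumptions made for illustration; the real macro and its arguments are not shown in the text.

```python
import operator


def enforce(compare, value, bound, message):
    """Tiny stand-in for a PADDLE_ENFORCE_* style check (hypothetical helper)."""
    if not compare(value, bound):
        raise ValueError(message)


def check_input_count(num_inputs, allow_single):
    # Assumed bound of 1: "greater than" rejects a single input,
    # "greater than or equal" accepts it.
    compare = operator.ge if allow_single else operator.gt
    enforce(compare, num_inputs, 1, f"expected more inputs, got {num_inputs}")
    return True


print(check_input_count(1, allow_single=True))  # True
```

With `allow_single=False` the same call raises `ValueError`, which corresponds to the single-input rejection described above.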

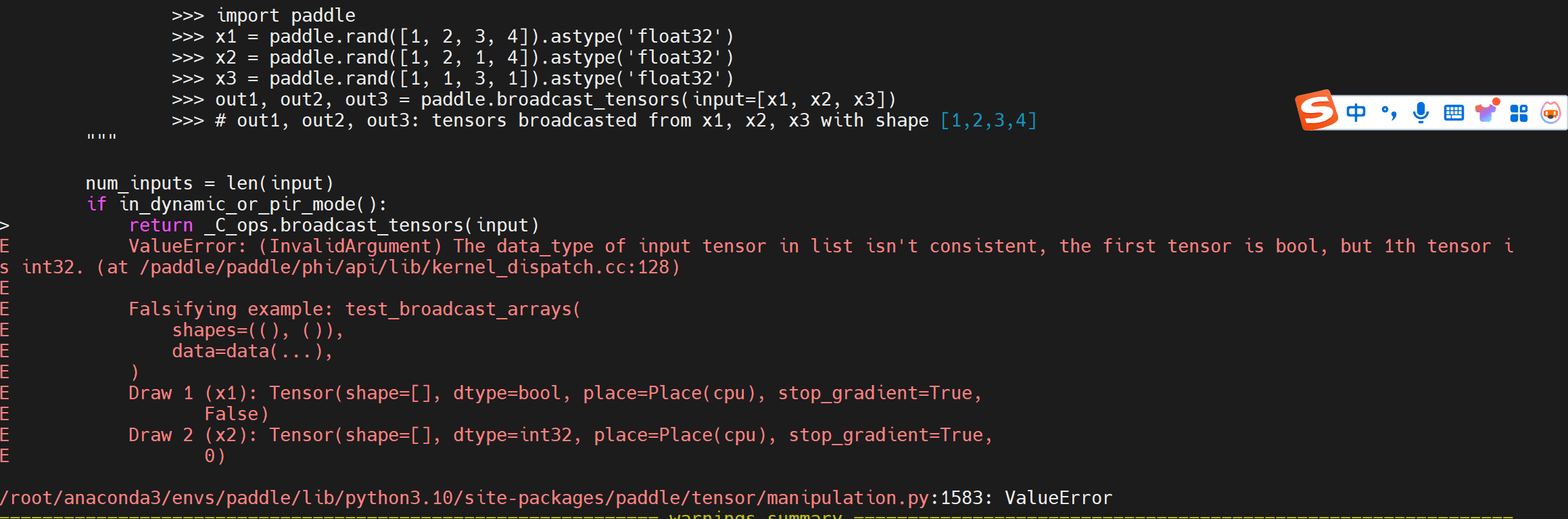

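api-test: dtype mismatch across multiple inputs

The test inputs are drawn with random dtypes, so does the dtype unification need to be implemented at the lower level?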
